A Machine Learning Researcher's Guide to Multiomics Analysis

The world of bioinformatics is depressingly opaque, rife with obscure vocabulary, technical jargon, and the sometimes inscrutable argot that biologists use to communicate. This complexity is compounded by the sheer number of biological data types and experimental methodologies in existence, leaving data scientists scratching their heads as to which datasets to use and how to properly preprocess biological data for machine learning. Furthermore, a lack of good tutorials and educational resources presents a real barrier to entry for computer scientists and machine learning researchers looking to contribute to the field. In my experience, most of the available resources are geared towards classically trained biologists looking to adopt a data science skillset. They overemphasize basic ML techniques such as PCA and Random Forests while failing to highlight the nuanced challenges inherent to working with biological data. This makes it difficult for those who already have the technical background to begin applying their skillset to biological data. Conversational tools such as ChatGPT are making the bioinformatics literature more accessible to the non-biologist, but sometimes it’s difficult to even discern where to start or what questions to ask.

One final obstacle I’ve noticed in the process of scientific crossover is that many of the tools for omics and pathway analysis are written in languages such as R and Perl, which are unfamiliar to the average machine learning practitioner, who tends to work in Python and C++. While such practitioners shouldn’t have any trouble picking up these languages per se, I think there’s an inherent mental resistance to having to learn and maintain a skillset in yet another tool. While some of these tools have been ported to Python, much of the example code and documentation remains in the original languages. This isn’t a dealbreaker by any means, but it adds another layer of mental fatigue for researchers trying to assimilate so much information as they break into the field.

From my own experience, I’ve observed that it’s much more common for machine learning people to gravitate towards the subfields of computational biology with more of a physics, or even chemistry, flavor (small-molecule drug discovery, virtual screening, molecular dynamics, protein structure prediction, medical imaging) while neglecting the subfields with a strong biological bent (sequencing and omics analysis, immunology, biological pathways and networks, genetics). This is a shame, because these areas are important and would undoubtedly benefit from more computational approaches.[1] Whether this clustering of computational folks within certain subdisciplines is due to a relative ease of knowledge transfer or simply an ideological preference is not totally clear, though I’d posit that it’s likely a bit of both.

I’m guilty of this inherent ideological bias myself. I’ve spent the majority of my PhD working on small-molecule drug discovery, molecular dynamics, and equivariance, because I found these topics to be the most mathematically interesting. Even in my work on biological pathways, I compartmentalized and abstracted away much of the biological knowledge. I now regret not having a deeper understanding of the biological picture, especially in light of recent research showing that some of the more mathematically interesting model priors (e.g. equivariance) can be eschewed at scale. I’m now working to address this by really focusing on developing my core biological knowledge base.

In this series of posts, I aim to condense some of my learnings to make it easier for other machine learning researchers to dive into the more classically biological subfields of bioinformatics. And nowhere is the complexity of biological data more pronounced than in the analysis and integration of multiomics datasets.

A Bird’s-Eye Overview of Omic Data

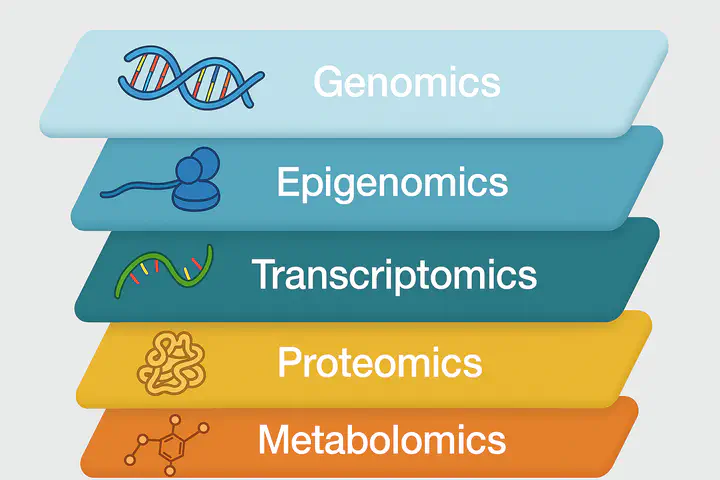

Omics data can be roughly grouped into five levels of granularity: genomics, epigenomics, transcriptomics, proteomics, and metabolomics. Each type of omics data gives us a different snapshot of a biological system. The type of data used depends on the problem being studied and on which assay technologies (sequencing, arrays, mass spectrometry) are available.

Genomics: What’s Encoded

At the lowest (most granular) level, we have genomics data. Due to the popularity of publicly funded research such as the Human Genome Project, this is the type of omics data with which the layperson is likely the most familiar. The genome is the genetic code of an organism: the DNA sequence of nucleotides (A, C, G, T) that encodes an individual’s heritable traits. Genomics data comes in a number of different formats, including FASTQ (raw sequencing reads with quality scores), BAM (reads aligned to a reference genome), and VCF (sequence variants). When aligned to a reference genome, an individual’s genomic data gives a snapshot of their genes, mutations, single nucleotide polymorphisms (SNPs), and structural variants. However, there’s often a large gap between what’s written in an individual’s genome and what’s ultimately expressed in their phenotype (observable physical characteristics). It is in bridging this gap that the other types of omics data come into play.
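To make the FASTQ format a little more concrete, here is a minimal sketch of a parser. It assumes the common four-line record layout (a header starting with `@`, the sequence, a `+` separator, and a quality string) and Phred+33 quality encoding. The names `FastqRecord` and `parse_fastq` and the example read are made up for illustration; in practice you would likely reach for an established library rather than a hand-rolled parser.

```python
from typing import Iterator, List, NamedTuple


class FastqRecord(NamedTuple):
    read_id: str
    sequence: str
    qualities: List[int]  # Phred scores, assuming Phred+33 encoding


def parse_fastq(lines: List[str]) -> Iterator[FastqRecord]:
    """Yield records from FASTQ text, assuming exactly four lines per record."""
    cleaned = [line.strip() for line in lines if line.strip()]
    for i in range(0, len(cleaned) - 3, 4):
        header, seq, sep, qual = cleaned[i:i + 4]
        if not header.startswith("@") or not sep.startswith("+"):
            raise ValueError(f"Malformed record at non-empty line {i + 1}")
        if len(seq) != len(qual):
            raise ValueError(f"Sequence/quality length mismatch in {header}")
        # Phred+33: quality score = ASCII code of the character minus 33.
        yield FastqRecord(header[1:], seq, [ord(c) - 33 for c in qual])


# Hypothetical single-read example (not real data).
example = ["@read_1", "ACGT", "+", "II5!"]
records = list(parse_fastq(example))
print(records[0].read_id, records[0].sequence, records[0].qualities)
```

Each base gets its own quality score, which is why the sequence and quality strings must be the same length.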

Genomics data is usually obtained via either whole-genome sequencing (WGS) or whole-exome sequencing (WES). Genomic data can be useful for, inter alia,

- Identifying heritable risk variants

- Diagnosing Mendelian disorders

- Understanding mutational burden in cancer.

Epigenomics: What’s Regulated

While DNA, as captured in genomics data, provides the hard-coded template for an individual’s physical characteristics and disease susceptibilities, it’s just that – a template. There are a number of steps that need to occur before genes, as encoded in DNA, are expressed in an individual’s phenotype. In fact, a large portion of the genome is noncoding, meaning it doesn’t directly code for any specific protein or trait. Yet, it’s suspected that much of noncoding DNA performs regulatory functions, rendering it far from unimportant.

The epigenome is the set of chemical modifications of DNA and associated proteins that control gene expression without altering the DNA sequence itself. You can think of the epigenome as “turning on” or “turning off” various parts of the genome via a variety of biologically-mediated processes such as DNA methylation and histone modification.

The assays involved in epigenomics capture the biological artifacts of these processes. One example is ChIP-seq, which captures the binding sites of proteins that regulate the transcription of DNA and, ultimately, gene expression. Other useful assays include 450K/EPIC methylation arrays and ATAC-seq, which profiles chromatin accessibility. The methylation arrays deliver raw intensities as IDAT files, which are usually summarized as β-values or M-values, while ChIP-seq and ATAC-seq produce sequencing reads that are typically summarized as peaks.
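For methylation arrays, β-values and M-values are two views of the same measurement, so it may help to see how they relate. The sketch below assumes the usual definitions: β is the methylated fraction of the total probe signal, and M is the log2 odds of β. The stabilizing `offset` of 100 is a commonly used default that I am assuming here, and the function names and intensities are purely illustrative.

```python
import math


def beta_value(methylated: float, unmethylated: float, offset: float = 100.0) -> float:
    """Fraction of probe signal that is methylated, in [0, 1)."""
    # The offset (an assumed default) stabilizes the ratio at low intensities.
    return methylated / (methylated + unmethylated + offset)


def beta_to_m(beta: float, eps: float = 1e-6) -> float:
    """M-value: the log2 odds (logit) of a beta value."""
    # Clip away from 0 and 1 so the logarithm stays finite.
    clipped = min(max(beta, eps), 1.0 - eps)
    return math.log2(clipped / (1.0 - clipped))


# Hypothetical probe intensities (not real data).
beta = beta_value(methylated=3000.0, unmethylated=900.0)
m_value = beta_to_m(beta)
print(beta, m_value)
```

β-values are easier to interpret (roughly, percent methylation), while M-values are unbounded and tend to behave better as inputs to statistical models.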

Ultimately, epigenomics data can be helpful for:

- Understanding gene regulation

- Capturing environmental effects on the genome

- Estimating biological age (epigenetic clocks)

However, it’s important to note that epigenomic data is context-dependent, tissue-specific, and sensitive to sample quality.

Transcriptomics: What’s Transcribed

Transcriptomics is the study of the products of DNA transcription, chiefly mRNA. Transcription is the first step of gene expression, in which a gene’s DNA sequence is copied into RNA. Loosely speaking, the central dogma of molecular biology is “DNA makes RNA makes protein,” and in this simplified picture information flows in one direction. Thus, only the parts of DNA that are transcribed can ultimately code for a downstream protein product. Of course, in reality, the picture is significantly more complex. For example, there are various types of non-coding RNA, such as lncRNA and miRNA, which may have regulatory functions but are not themselves translated into protein.
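For readers who think best in code, the “DNA makes RNA makes protein” flow can be sketched as two string transformations. This is a toy illustration under simplifying assumptions: the input is the coding strand read 5′ to 3′, there is no splicing, translation starts at the first `AUG`, and the standard genetic code applies. The function names and the example sequence are invented for illustration.

```python
BASES = "UCAG"
# Standard genetic code, one letter per codon in UCAG order ("*" = stop).
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODONS = [a + b + c for a in BASES for b in BASES for c in BASES]
CODON_TABLE = dict(zip(CODONS, AMINO_ACIDS))


def transcribe(coding_strand: str) -> str:
    """DNA -> mRNA: the transcript matches the coding strand with T replaced by U."""
    return coding_strand.upper().replace("T", "U")


def translate(mrna: str) -> str:
    """mRNA -> protein: read codons from the first AUG until a stop codon."""
    start = mrna.find("AUG")
    if start == -1:
        return ""  # no start codon, so nothing is translated
    protein = []
    for i in range(start, len(mrna) - 2, 3):
        amino_acid = CODON_TABLE[mrna[i:i + 3]]
        if amino_acid == "*":
            break
        protein.append(amino_acid)
    return "".join(protein)


# Hypothetical toy gene (not a real sequence).
mrna = transcribe("ATGGCCAAGTAA")
protein = translate(mrna)
print(mrna, protein)
```

Transcriptomic assays measure how much of the intermediate product (the mRNA) is present, not the sequence transformation itself.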

The most prevalent transcriptomic methods are RNA-seq and, more recently, scRNA-seq. Both provide a measure of gene expression, with scRNA-seq measuring gene expression within a single cell. This data can be used to perform analyses such as differential expression, showing how gene expression levels differ across conditions or treatments. Differential expression analyses can play a crucial role in drug discovery programs and precision medicine efforts.
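As a rough illustration of what differential expression measures, the sketch below normalizes raw read counts to counts per million (CPM) and computes a log2 fold change between two samples. This is only the effect-size part of the analysis, under the assumption of one sample per condition. Real analyses use replicates and dedicated statistical packages that model count variability. The gene names, counts, and the pseudocount of 1 are all made up.

```python
import math
from typing import Dict


def counts_per_million(counts: Dict[str, int]) -> Dict[str, float]:
    """Scale raw counts by library size so samples are comparable."""
    total = sum(counts.values())
    return {gene: 1e6 * count / total for gene, count in counts.items()}


def log2_fold_change(
    treated: Dict[str, float],
    control: Dict[str, float],
    pseudocount: float = 1.0,
) -> Dict[str, float]:
    """log2(treated / control) per gene; the pseudocount avoids log of zero."""
    return {
        gene: math.log2((treated[gene] + pseudocount) / (control[gene] + pseudocount))
        for gene in control
    }


# Hypothetical raw counts for two genes in two samples.
control_counts = {"GENE_A": 100, "GENE_B": 900}
treated_counts = {"GENE_A": 400, "GENE_B": 600}

lfc = log2_fold_change(
    counts_per_million(treated_counts),
    counts_per_million(control_counts),
)
print(lfc)
```

A positive value means the gene is more highly expressed in the treated sample, and a value near 2 corresponds to roughly a fourfold increase.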

Ultimately, transcriptomics data is useful for tasks such as:

- Identifying differentially expressed genes

- Clustering disease subtypes

- Inferring pathway activity and cell types

Proteomics: What’s Built

Proteomics is the study of proteins, the macromolecules composed of long chains of amino acids that perform the majority of a cell’s functions. While proteomics is often more informative about cellular function than lower levels of omics analysis such as genomics, it’s also significantly more difficult in practice. This is because protein abundance varies with environmental conditions, the time of measurement, and post-translational modifications. Proteomics data is typically represented as protein IDs with intensity/abundance values and can be measured using techniques such as mass spectrometry, gel electrophoresis, multidimensional protein identification technology (MudPIT), and stable isotope labeling with amino acids in cell culture (SILAC).

Ultimately, proteomics can be useful for:

- Identifying biomarkers

- Measuring active signaling pathways

- Understanding post-translational modifications such as phosphorylation

Metabolomics: What’s Happening

Finally, metabolomics is the study of small-molecule metabolites such as glucose or neurotransmitters. The data typically gives the concentrations of named metabolites and can be acquired via techniques such as LC-MS, GC-MS, and NMR spectroscopy. Metabolomics is less common than other omics methods, though it still has a place within a comprehensive omics pipeline. However, it is highly sensitive to diet, stress, and collection conditions, which makes its measurements noisier and harder to reproduce than those of other methods.

Broadly speaking, metabolomics is useful for:

- Capturing the functional state of a cell or tissue

- Identifying metabolic dysregulation

- Linking genotype to phenotype

What Are Batch Effects?

Any bioinformatics researcher will tell you that working with biological data can be difficult because the data is often confounded by batch effects. A batch effect is an unwanted source of variation in biological data that is not related to the biological variable of interest (e.g., disease vs. control), but instead to technical or procedural differences during data collection or processing. Common sources of batch effects include the lab/technician handling samples, the date and time at which data was generated, differences in equipment such as the specific sequencer used, different chemical batches (reagent lots), the center or hospital at which a study was performed, and even the layout of sample plates (e.g. the edge vs. center of a microarray slide).

Often, statistical and machine learning algorithms may pick up on a signal related to underlying batch effects rather than any true biological signal. This makes removing batch effects from data crucial to getting a useful result from a model. Here are a few common methods that researchers may use to correct for batch effects.

| Method | Description |
|---|---|
| ComBat (sva package) | Empirical Bayes adjustment for batch |
| limma::removeBatchEffect | Linear modeling-based correction |
| Harmony / Scanorama | For single-cell or high-dimensional integration |
| Mixed-effect models | Treat batch as a random effect in modeling |
| Include batch as a covariate | In differential expression analysis |
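To give a feel for what batch correction does, here is a deliberately simple sketch: for a single feature, subtract each batch’s mean and add back the overall mean. This is not ComBat or any of the methods in the table above; it is the crudest form of the same idea, and it assumes that the biological groups are balanced across batches. If batch and biology are confounded, centering like this will remove real signal along with the batch effect. The function name and the toy values are invented.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List


def center_by_batch(values: List[float], batches: List[str]) -> List[float]:
    """Remove per-batch mean shifts for one feature, keeping the overall mean."""
    overall = mean(values)
    by_batch: Dict[str, List[float]] = defaultdict(list)
    for value, batch in zip(values, batches):
        by_batch[batch].append(value)
    batch_means = {batch: mean(vals) for batch, vals in by_batch.items()}
    # Shift every sample so its batch mean lines up with the overall mean.
    return [
        value - batch_means[batch] + overall
        for value, batch in zip(values, batches)
    ]


# Hypothetical expression values for one gene measured in two batches.
values = [1.0, 2.0, 11.0, 12.0]
batches = ["A", "A", "B", "B"]
corrected = center_by_batch(values, batches)
print(corrected)
```

After centering, the large offset between batches A and B is gone, while the within-batch differences between samples are preserved.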

Conclusion

This broad overview barely scratches the surface of omics technology, but hopefully it gives researchers from adjacent fields a framework for understanding biological data at various levels. In future posts, I hope to dive deeper into each type of omics data individually and run through example analyses using each data type. Stay tuned!

[1] Note that this is true in reverse as well. There’s no reason that fields such as molecular dynamics should be the exclusive domain of physicists and computational chemists. For example, a properly guided biologist could provide great insight to MD researchers who’ve developed “tunnel vision” around improving the narrow, technical aspects of simulations without stepping back to consider how they fit into the big picture biologically.