The Universal Approximation Theorem for Neural Networks

In 1989, Hornik, Stinchombe, and White published a proof of the fact that for any continuous function $f$ on a compact set $K$, there exists a feedforward neural network, having only a single hidden layer, which uniformly approximates $f$ to within an arbitrary $\varepsilon > 0$ on $K$. I present here an elegant proof of the same fact given by Cybenko. In my exposition, I’ll attempt to clear up some points of confusion which I encountered on my first read through as well as clarify some of the finer mathematical details that Cybenko glossed over in his paper. That said, the crux of the the theorem is simple, and begins with a few definitions. As I proceed through the proof, I’ll try to bolster the intuition behind it. Knowledge of measure theory and functional analysis is ideal for understanding the details of the proof, although it might be possible to grasp the general argument given a strong background in elementary real analysis.

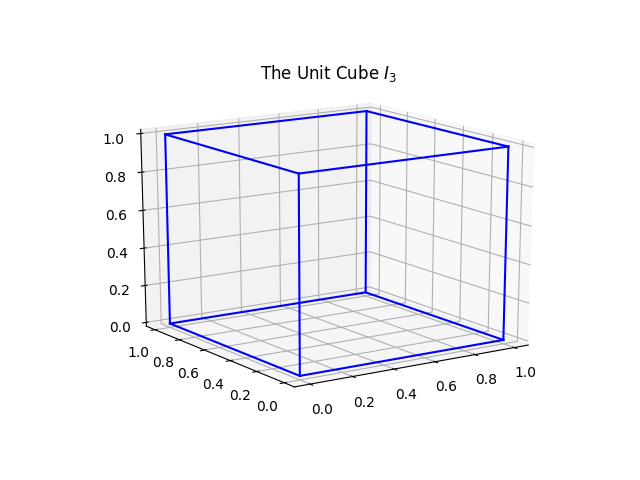

By $I_n$, we denote the $n$-dimensional unit cube which we can represent as a Cartesian product of unit intervals $[0, 1]^n$.

- $\mu(K) < \infty$ for all compact sets $K$

- $\mu(E) = \inf{\mu(U) : E \subseteq U, U \text{ open } }$

- $\mu(E) = \sup{\mu(K) : K \subseteq E, K \text{ compact} }$

Intuitively, a single measure imposes some notion of size or volume on a set. While different measures may define different sizes for a single set, they all ideally convey some idea of how much space that set takes up relative to the larger space in which it resides. All the integration to be done throughout this proof will be with respect to regular measures on $C(I_n)$. Why is this important? For one thing, as their name suggests, regular measures exhibit a certain structural “regularity”. They behave in the way that, in some sense, one would intuitively expect. For example, the measure of a compact set, has finite measure when the measure being applied is regular. This makes sense. In a metric space, compact sets are closed and bounded, and it would be weird for a set $K$ to be compact yet somehow take up infinite space. Similarly, if we are measuring a set $E$ and we take the lower bound on the measure of open sets subsuming $E$, we should get the measure of $E$. The same goes for when we approximate $E$ by compact sets from below. Another reason for restricting ourselves to regular measures is that this will be a prerequisite for invoking the Riesz Representation Theorem in our proof. More on this in a bit.

Now, recall the uniform norm of a function $f:A \to B$, $$ |f| = \sup {|f(x)| : x \in A } $$ In other words, the uniform norm gives an upper bound on all possible values of $f$, and for two functions $f, g$ we see that $| f - g |$ gives a uniform bound on the extent to which $f$ and $g$ differ from one another. What we aim to show is that the set of feedforward neural networks is dense in $C(I_n)$ with respect to the uniform norm. A set $A$ is dense in $X$ if $\overline{A} = X$ (the closure of $A$ is the entire space $X$). Since we are working in a metric space where we have a notion of distance between functions (here the metric is the uniform norm), we can define “denseness” in more intuitive terms. In this case, we say $A$ is dense in $X$ if for every $x \in X$ there exists a sequence $(a_n)_{n \in \mathbb{N}} \in A$ such that $\lim_{n \to \infty} a_n = x$. With this in mind, we can reformulate the guarantee of the uniform approximation theorem in a few different ways:

- The set of all feedforward neural networks $\mathcal{N}$ is dense in $C(I_n)$.

- For every continuous function $f \in C(I_n)$, there exists a sequence of neural networks $(n_j) \in \mathcal{N}$ converging to $f$, i.e. $\lim_{j \to \infty} n_j = f$.

- For every continuous function $f \in C(I_n)$ and $\varepsilon > 0$ there exists a neural network $g \in \mathcal{N}$ such that $|g - f| < \varepsilon$.

That last formulation may be the most intuitive. Effectively, it’s saying that you can specify an arbitrary precision $\varepsilon$ to within which you’d like to approximate a continuous function $f$ on $I_n$ and a neural network exists that will accomplish this. Of course, the proof gives us no way to go about explicitly constructing or finding such a neural network, but hopefully the guarantee still provides you with some theoretical peace of mind. Having formulated our problem, we begin by giving an explicit mathematical definition of a feedforward neural network.

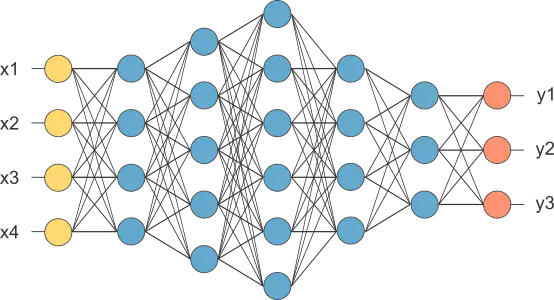

Definition: A feedforward neural network having $N$ units or neurons arranged in a single hidden layer is a function $y : \mathbb{R}^d \to \mathbb{R}$ of the form $$y(\mathbf{x}) = \sum_{i=1}^N \alpha_i \sigma \left(\mathbf{w}_i^{T}\mathbf{x} + b_i \right)$$ where $\mathbf{w}_i, \mathbf{x} \in \mathbb{R}^d$, $\alpha_i, b_i \in \mathbb{R}$, and $\sigma$ is a nonlinear sigmoidal activation function. The $\mathbf{w}_i$ are the weights of the individual units and are applied to the input $\mathbf{x}$. The $\alpha_i$ are the network weights and are applied to the output of each unit in the hidden layer. Finally, $b_i$ is the bias of unit $i$. In effect, a neural network of this sort is just a linear combination of affine transformations of its inputs under sigmoidal activations. By an affine transformation, we mean the $\mathbf{w}_i\mathbf{x} + b_i$ part of the definition. But what’s a sigmoidal activation function?

Definition: A sigmoidal activation function $\sigma : \mathbb{R} \to \mathbb{R}$ satisfies $$\sigma(t) \to \begin{cases} 1 & \text{ as } t \to \infty \ 0 & \text{ as } t \to -\infty \end{cases}$$ In other words, as $t$ gets exponentially large, $\sigma(t)$ limits towards 1, and as $t$ gets exponentially negative, $\sigma(t)$ limits towards 0.

Pictured above is the softmax function, one example of a sigmoidal function. As $x, y$ tend to $-\infty$ we see that $z$ tends to 0. Conversely, if $x,y$ tend to $\infty$, $z$ tends to 1.Definition: We say $\sigma$ is discriminatory if for $\mu \in M(I_n)$ and $$\int_{I_n} \sigma(\mathbf{w}_i^T\mathbf{x} + b_i)\ d\mu(\mathbf{x}) = 0$$ for all $\mathbf{w}_i \in \mathbb{R}^d, b_i \in \mathbb{R}$ then $\mu = 0$.

This definition tells us something interesting about discriminatory $\sigma$. In some sense, a discriminatory $\sigma$ is volumetrically non-destructive when it acts on linear transformations of input. That is, the definition tells us that for nonzero $\mu$, there exist $w_i, b_i$ such that

$$\int_{I_n} \sigma(\mathbf{w}_i^T\mathbf{x} + b_i)\ d\mu(x) \neq 0$$ In other words, the two opposing limit properties of $\sigma$ prevent it from “losing” the information conveyed in the weighted-then-shifted input $\mathbf{x}$ by, for example, ensuring that it can’t send the affine space $\mathbf{w}_i\mathbf{x} + b_i$ to a set of measure zero.

We are now ready to present the Universal Approximation Theorem and its proof.

Theorem 1 If the $\sigma$ in the neural network definition is a continuous, discriminatory function, then the set of all neural networks is dense in $C(I_n)$.

Proof: Let $\mathcal{N} \subset C(I_n)$ be the set of neural networks. As mentioned earlier, $\mathcal{N}$ is a linear subspace of $C(I_n)$. To prove that $\mathcal{N}$ is dense in $C(I_n)$, we will prove that its closure is $C(I_n)$. By way of contradiction, suppose that $\overline{\mathcal{N}} \neq C(I_n)$. Then $\overline{\mathcal{N}}$ is a closed, proper subspace of $C(I_n)$.

Now, let’s take a quick digression here to review the statement of the Hahn-Banach Theorem.

Hahn-Banach Theorem Let $X$ be a real vector space, $p$ a sublinear functional on $X$, $M$ a subspace of $X$, and $f$ a linear functional on $M$ such that $f(x) \leq p(x)$ for all $x \in M$. Then there exists a linear functional $F$ on $X$ such that $F(x) \leq p(x)$ for all $x \in X$ and $F|M = f$.

For us, the Hahn-Banach Theorem gives us a way to extend a bounded linear functional defined on $\mathcal{N}$ to one defined on all of $C(I_n)$ in a way that will eventually allow us to derive a contradiction. By the Hahn-Banach Theorem, there exists a bounded linear functional on $C(I_n)$, call it $L$, such that $L(\mathcal{N}) = L(\overline{\mathcal{N}}) = 0$ but $L \neq 0$. Recall that a linear functional is just a linear map from a vector space $X$ to $A$ where $A = \mathbb{R} \text{ or } \mathbb{C}$. It should be obvious that $\int_{I_n}$ acts as a linear functional on $C(I_n)$ (if it’s not, consider the domain and codomain of Lebesgue integration). Now we give the statement of the Riesz Representation Theorem.

Riesz Representation Theorem If $I$ is a positive linear functional on $C_c(X)$, there is a unique Radon measure $\mu$ on $X$ such that $I(f) = \int f\ d\mu$ for all $f \in C_c(X)$. Moreover, $\mu$ satisfies $$\mu(U) = \sup{I(f) : f \in C_c(X), 0 \leq f \leq 1, \text{supp}(f) \subset U}\text{ for all open } U \subset X$$ and $$\mu(K) = \inf{I(f) : f \in C_c(X), 0 \leq f \leq 1, f \geq \chi_K}\text{ for all compact } K \subset X$$ where $C_c(X)$ denotes the functions of compact support defined on $X$ and $\chi_K$ is the indicator function of the compact set $K$.

Thankfully, in our case $C(I_n)$ is $\sigma$-compact so instead of dealing with Radon measures, we can restrict ourselves to the regular Borel measures introduced earlier. By the Riesz Representation Theorem, we can write the functional $L$ in the following form $$L(h) = \int_{I_n} h(x)\ d\mu (x)$$ for some $\mu \in M(I_n)$, for all $h \in C(I_n)$. In particular, since by definition any neural network is a member of $\mathcal{N}$, and $L$ is identically zero on $\mathcal{N}$, we have $$\int_{I_n} h(x)\ d\mu (x) = 0$$ Since we took $\sigma$ to be discriminatory, we must have $\mu = 0$. But this contradicts our determination that $L \neq 0$, because $$\mu = 0 \implies \int_{I_n} h(x)\ d\mu(x) = 0 \quad \text{ for all } h \in C(I_n)$$ Therefore, $\mathcal{N}$ is dense in $C(I_n)$. $\square$

We have arrived at our desired result, but it all hinges on our assumption that the sigmoid function $\sigma(t)$ is discriminatory, a fact that we have not yet proved. Let’s do that now.

Lemma Any bounded, measurable sigmoidal function $\sigma$ is discriminatory. In particular, any continuous sigmoidal function is discriminatory.

Proof: For any $x, y, \theta, \varphi$ we have $$\begin{align*} \sigma_{\lambda}(x) = \sigma(\lambda(y^Tx + \theta) + \varphi) &= \\ &\begin{cases} \to 1 & \text{ for } y^Tx + \theta > 0 \text{ as } \lambda \to \infty \\ \to 0 & \text{ for } y^Tx + \theta < 0 \text{ as } \lambda \to \infty \\ = \sigma(\varphi) & \text{ for } y^Tx + \theta = 0 \text{ for all } \lambda \end{cases} \end{align*}$$ This can be seen by applying the properties of the sigmoidal function to its input for varying values of $\lambda$. In other words, as $\lambda \to \infty$ for $y^Tx + \theta > 0$ we are in essence calculating $\sigma(t)$ for $t \to \infty$. Similarly, as $\lambda \to \infty$ for $y^Tx + \theta < 0$ we get $\sigma(t)$ for $t \to -\infty$. The third case is obvious.

Thus the functions parameterized by $\lambda$, $\sigma_{\lambda}(x)$ converge pointwise and boundedly to $$\begin{align*} \gamma(x) = \begin{cases} 1 & \text{ for } y^Tx + \theta > 0 \ 0 & \text{ for } y^Tx + \theta < 0 \ \sigma(\varphi) & \text{ for } y^Tx + \theta = 0 \ \end{cases} \end{align*}$$ as $\lambda \to \infty$. This follows directly from the above.

Let $\Pi_{y, \theta} = {x | y^Tx + \theta = 0}$ be an affine hyperplane and let $H_{y, \theta}$ be the open half-space defined by ${y^Tx + \theta > 0}$. Note that $|\sigma_\lambda(x)| \leq \max(1, \sigma(\varphi))$ for all $x$. Therefore, we can apply the Dominated Convergence Theorem to get $$\begin{align*} \lim_{\lambda \to \infty} \int_{I_n} \sigma_{\lambda}(x)\ d\mu(x) &= \int_{I_n} \lim_{\lambda \to \infty} \sigma_{\lambda}(x)\ d\mu(x) \\ &= \int_{I_n} \gamma(x)\ d\mu(x) \\ &= \sigma(\varphi)\mu(\Pi_{y, \theta}) + \mu(H_{y, \theta}) \end{align*}$$ for all $\varphi, \theta, y$.

We want to show that if the above integral is equal to zero, then $\mu$ is forced to be 0. Equivalently, we must show that the measure of all half-planes being 0 implies that the measure $\mu$ equals 0. To do this, we need to fix $y$. For a bounded, measurable function $h$, define a linear functional $F$ $$F(h) = \int_{I_n} h(y^Tx)\ d\mu(x)$$ Note that $F$ is a bounded linear functional on $L^{\infty}(\mathbb{R})$ since $\mu$ is a finite signed measure. This is because when we integrate with respect to a finite measure, we can’t get an infinite result. Let $h$ be the indicator function for the interval $[\theta, \infty)$ so that $$F(h) = \int_{I_n}h(y^Tx)\ d\mu(x) = \mu(\Pi_{y, -\theta}) + \mu(H_{y, -\theta}) = 0$$ To see why this is true, recall that the indicator function $\chi_A:X \to {0, 1}$ for a set $A \subseteq X$ is defined as follows $$\chi_A(x) = \begin{cases}1 & \text{ if } x \in A \\ 0 & \text{ otherwise }\end{cases}$$ Thus, if $y^Tx \in [\theta, \infty)$, then $y^Tx - \theta \geq 0$. For the integral, this decomposes into the measure of two disjoint sets, the hyperplane $\Pi_{y, -\theta} = {x : y^Tx - \theta = 0}$ and the half-space $H_{y, -\theta} = {x : y^Tx - \theta > 0}$. Similarly, $F(h) = 0$ if $h$ is the indicator function for the open interval $(\theta, \infty)$. By linearity, $F$ is 0 for the indicator function on any interval and hence for any simple function. Simple functions are dense in $L^{\infty}(\mathbb{R})$, so $F = 0$.

In particular, the bounded, measurable functions $s(u) = \sin(m \cdot u)$ and $c(u) = \cos(m \cdot u)$ give $$\begin{align*} F(s + ic) &= \int_{I_n} \cos(m^Tx) + i\sin(m^Tx)\ d\mu(x) \\ &= \int_{I_n} e^{im^Tx}\ d\mu(x) \\ &= 0 \end{align*}$$ for all $m$. Thus, the Fourier transform of $\mu$ is 0 and so $\mu$ must be zero as well. Therefore $\sigma$ is discriminatory. Here we chose a specific function $h$ which drives the measure $\mu$ to zero. The Fourier transform is a clearly reasonable choice, although it seems apparent to me that other suitable functions exist.